Playback Mode

Note

This feature is experimental and subject to change. Experimental features should not be used in production products and are unsupported. We are providing these experimental features as a preview of what might be coming in future releases. If you have feedback on an experimental feature, post it in the Lightship Developer Community.

Note

Currently, Playback Mode is supported only on Intel and Apple Silicon Macs; it is not supported on Windows.

Playback is a new mode of Virtual Studio that lets you run your AR application inside the Unity Editor using real AR data. It works by “playing back” a dataset, which for ARDK 2.2 contains camera poses, frame images, and (optionally) location data. At runtime, ARDK’s context awareness algorithms run on the dataset’s frames to surface camera, depth, semantics, and meshing data to the application. VPS localization (including Wayspot Anchor creation and restoration) is also supported in Playback mode.
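To make the idea of “playing back” concrete, here is a conceptual Python sketch. It is not ARDK code: `RecordedFrame` and `play_back` are hypothetical names, and the field layout (a timestamp, a flattened pose matrix, a latitude/longitude pair) is assumed purely for illustration. It shows only the core idea: recorded frames are replayed in capture order where a live session would otherwise deliver camera frames, and location data may be absent.

```python
from dataclasses import dataclass
from typing import Iterable, Iterator, Optional, Tuple


@dataclass(frozen=True)
class RecordedFrame:
    """Hypothetical record of one captured frame (field layout is assumed)."""
    timestamp: float                  # seconds since the capture started
    camera_pose: Tuple[float, ...]    # e.g. a flattened 4x4 pose matrix
    image_path: str                   # the frame image recorded at this pose
    location: Optional[Tuple[float, float]] = None  # (lat, lon); optional in a dataset


def play_back(frames: Iterable[RecordedFrame]) -> Iterator[RecordedFrame]:
    """Yield recorded frames in capture order, standing in for a live camera feed."""
    for frame in sorted(frames, key=lambda f: f.timestamp):
        # A real playback system would hand each frame to its processing
        # (e.g. depth, semantics, meshing) here; this sketch just yields it.
        yield frame
```

Sorting by timestamp keeps the sketch correct even if frames are loaded out of order; a real system would also pace frames in time, which is omitted here.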

Getting Datasets

Two example datasets can be downloaded here (showing the same Wayspot localization target from different angles):

https://storage.googleapis.com/nianticweb-epos-web-staging/gandhi_statue.tgz

https://storage.googleapis.com/nianticweb-epos-web-staging/gandhi_statue_peer_2.tgz
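The example datasets are .tgz (gzip-compressed tar) archives, so any archive tool can unpack them. As one option, here is a minimal Python sketch using the standard-library `tarfile` module. The `extract_dataset` helper is hypothetical, not part of ARDK. It refuses links and any entry that would land outside the destination folder, so an archive containing such entries will be rejected. After extracting, select the folder that contains capture.json, as described under Running Your Application in Playback Mode.

```python
import tarfile
from pathlib import Path
from typing import List


def extract_dataset(archive_path: str, dest_dir: str) -> List[Path]:
    """Unpack a .tgz dataset into dest_dir; return the top-level paths it created."""
    dest = Path(dest_dir).resolve()
    dest.mkdir(parents=True, exist_ok=True)
    with tarfile.open(archive_path, "r:gz") as tar:
        members = tar.getmembers()
        for member in members:
            # Refuse links and any entry that would resolve outside dest_dir.
            if member.issym() or member.islnk():
                raise ValueError(f"link in archive: {member.name}")
            target = (dest / member.name).resolve()
            if target != dest and dest not in target.parents:
                raise ValueError(f"unsafe path in archive: {member.name}")
        if hasattr(tarfile, "data_filter"):
            # Newer Python versions can also enforce these rules themselves.
            tar.extractall(dest, members=members, filter="data")
        else:
            tar.extractall(dest, members=members)
    # Collect the distinct top-level names (e.g. the dataset folder).
    return sorted({dest / Path(m.name).parts[0] for m in members if Path(m.name).parts})
```

For example, `extract_dataset("gandhi_statue.tgz", "datasets")` unpacks the first example dataset into a local datasets folder.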

Otherwise, you can create your own datasets by building the scene at Assets/ARDKExamples/VirtualStudio/Playback/PlaybackDatasetCapture.unity to a device. Press the Run AR button, then press Start Recording to begin the capture; the button changes to Stop Recording, which stops and saves the capture. After you press Stop Recording, it can take some time to finish writing the dataset to your device, so press Toggle Log to show log messages and wait until you see “Successfully written archive” in the log before closing the scene.

Note

To use a dataset for VPS localization, the dataset must have been recorded with location capture enabled, at a VPS-enabled Wayspot.

When recording your own datasets, keep the following best practices in mind.

Walk slowly. When you want to examine how virtual content looks in AR, slow and thorough recordings are easier to work with.

Record multiple sessions with various camera paths and angles, so you’ve got a variety of “player behavior” to test your application on.

Unarchived capture data is saved to a tmp_captures folder on your device and is not automatically deleted when you close the scene. To clean up old capture data in tmp_captures, press Delete Files while the scene is running.

The saved captures can be extracted onto a computer in the same way that saved meshes are extracted.

iOS

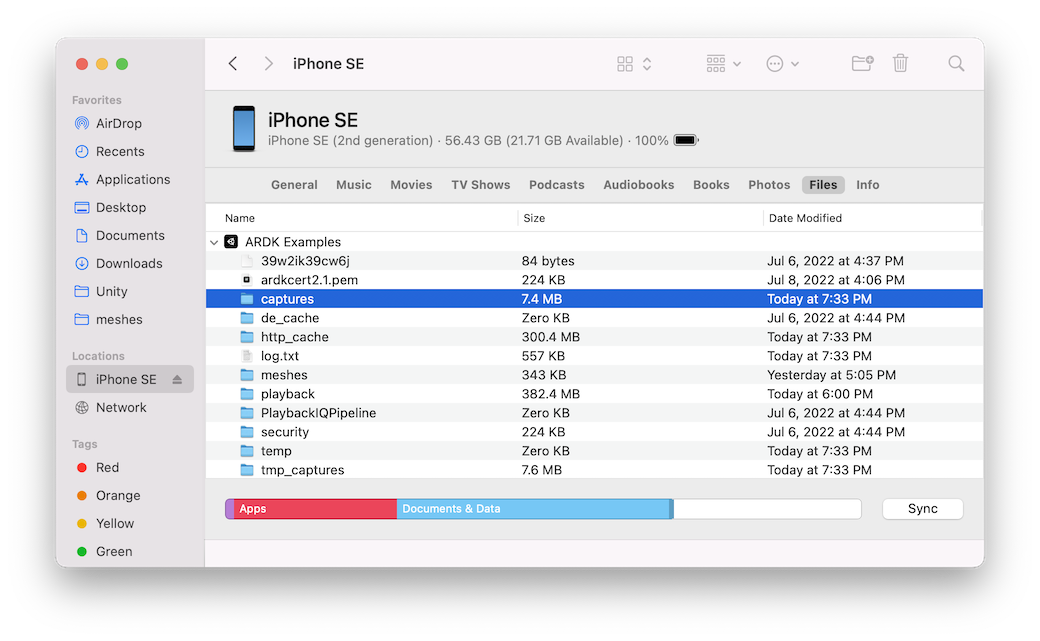

Connect your iPhone to your laptop via USB and use Finder (macOS Catalina and later) to browse the phone’s files. Expand the ARDK Examples folder and copy its captures folder to your local disk.

Android

Connect your Android phone to your laptop via USB and use Android File Transfer to browse the phone’s files.

You will find the files under Android/data/com.nianticlabs.ar.ardkexamples/files/captures, in multiple directories each representing a different capture.
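Once a captures folder is on your computer, a small script can help you pick a recording when you have several. The sketch below uses a hypothetical `list_captures` helper (not part of ARDK) and assumes, as described above, that each subdirectory of the copied captures folder is one capture. It sorts by modification time, newest first; note that copying files off the device may reset those times, in which case the order reflects the copy rather than the recording.

```python
from pathlib import Path
from typing import List


def list_captures(captures_root: str) -> List[Path]:
    """Return the capture subdirectories of captures_root, newest first (hypothetical helper)."""
    root = Path(captures_root)
    # Assumption: each subdirectory of the copied captures folder is one capture.
    capture_dirs = [p for p in root.iterdir() if p.is_dir()]
    # Sort by modification time so the most recent entry comes first.
    return sorted(capture_dirs, key=lambda p: p.stat().st_mtime, reverse=True)
```

For example, `list_captures("captures")[0]` returns the most recently modified capture directory.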

Running Your Application in Playback Mode

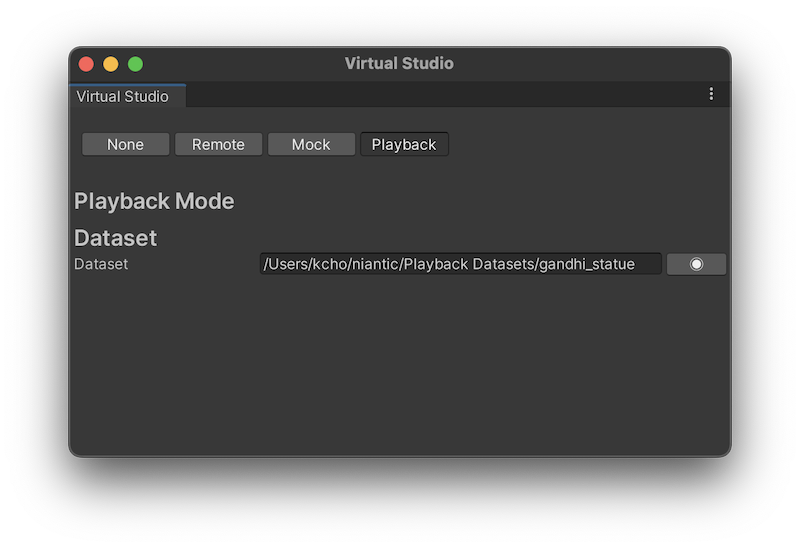

Open the Lightship > ARDK > Virtual Studio window and select the Playback tab.

Click the circle icon on the right to open the directory selection dialog. Select the directory containing the capture files (it should contain a capture.json file as well as many frame_???.jpg files).

Open your AR scene and enter Play Mode.

Once ARSession.Run is called, the ARSession recorded in your dataset will start playing. Image buffers, depth buffers, semantic buffers, mesh data, and camera pose will be surfaced through ARFrame updates exactly as they were on the device when you recorded the dataset.
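Before selecting a directory in the Playback tab, you can sanity-check it with a short script. The sketch below uses a hypothetical `check_capture_dir` helper (not part of ARDK) that only checks for the files named above: a capture.json file and frame images. It matches `frame_*.jpg` rather than exactly three characters, since the number of digits used in longer captures is an assumption, and it does not validate the contents of capture.json.

```python
from pathlib import Path


def check_capture_dir(capture_dir: str) -> int:
    """Raise FileNotFoundError unless capture_dir looks like a capture; return its frame count."""
    root = Path(capture_dir)
    if not (root / "capture.json").is_file():
        raise FileNotFoundError(f"no capture.json in {root}")
    # Frames are described as frame_???.jpg; match any suffix in case
    # longer captures use more digits (an assumption).
    frames = list(root.glob("frame_*.jpg"))
    if not frames:
        raise FileNotFoundError(f"no frame_*.jpg files in {root}")
    return len(frames)
```

Running `print(check_capture_dir("path/to/capture"))` either prints the number of frame images or raises an error naming the missing file type.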

Known Issues

Plane and image anchors are not currently supported (we’re working on it).

Exiting Play Mode while the Awareness model is being downloaded (which happens between when the ARSession starts running and when the first depth, semantic, or mesh data is surfaced) will cause the Unity Editor to freeze and/or crash.

Meshing configurations (block size, etc.) are not applied.